In my previous post I offloaded some of my ideas about setting up one’s module development project. In this instalment I’ll be looking at another aspect of module development: the data layer. Again, these hobbyhorses are not meant to be normative. What you do is up to you. But just like last time most of these points are informed by what I’ve seen posted on module development until now and my opinion that I think many of these guides are great for a one off/small module projects but lack some tricks that allow you to make more stable modules delivered to a larger audience.

Hi, my name is Peter Donker and I lovez DotNetNuke. I run Bring2mind which is responsible for the Document Exchange module. I’ve been active in the DotNetNuke ecosystem since 2003.

Part 2. The Data Layer

General Design

Commonly (as of this writing and as since DNN’s inception) we chop our application up into several layers to increase robustness, maintainability and general sanity. And commonly the “bottom” layer is the data layer: the layer of your app that stores and maintains data. “Ah”, you say, “SQL”. Yes, SQL. But a touch more as well. The design pattern used by DotNetNuke and most modules is a so-called data provider consisting of an abstract DataProvider class and an implementation in an SqlDataProvider class. The latter holds the references to SQL Server and knows which stored procedures to call. This then provokes the code in SQL server to do its magic. So in this layer we are looking at the following:

- The abstract DataProvider class

- The SqlDataProvider class

- The SqlDataProvider scripts that you distribute with the module

The use of the provider pattern is so we can switch out SQL Server for another database technology. This may come as a surprise, but you could make DNN work on MySQL, Oracle, or any other relational database. In practice this is a lot of work, though, and you need to create the implementation of the DataProvider class for each and every module you have on the system. Not a trivial task.

There are alternatives to the pattern for your module. You could collapse the first two and just do away with the provider pattern. Fair enough. I’m not aware of a significant market for anything else than SQL Server. Alternatively, there has been a lot of attention for Entity Framework. The downside is that it doesn’t support DNN’s objectQualifier (more about that later). So for a module for a general audience that doesn’t fly! So my advice is: stay with the herd and do the grunt work of the data provider.

If you’re like me you often begin with the database. I usually begin my conception of the model there. I know there are development methods that claim it’s better to use method XYZ which starts somewhere else. But this has worked quite well for me over the years. Object-properties go straight to the data. So I’m first going to look at the database and more specifically the creation of the scripts that you distribute.

SQL Scripts

SQL scripts are something of an oddity in your project. They are “code” in the same way as your C#/VB code is “code”. I.e. if there is a syntax error, things will go South. But the scripts themselves are not subject to Visual Studio’s error highlighting. Instead they are “dumb text files”. The actual instantiation in SQL can be seen in SQL Management Studio (which you’ll commonly have running alongside Visual Studio), but that is far from an IDE of the likes of VS. So we need to keep in mind that the scripts are more prone to our own errors than the rest of our work. So what you really need is a rigorous method to deal with scripts. This is not trivial, but it becomes exponentially more important when working in a team. I’ve just worked on the Blog module and have had to bridge two different ways of working with scripts. I can tell you: I spent many hours making sure the scripts were good. For what it’s worth I will explain what my method is for generating scripts. But first a few words about the three script types.

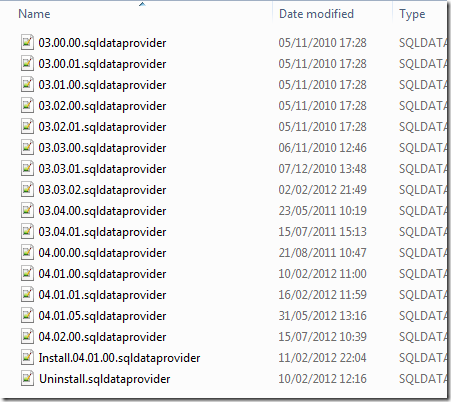

Install, Upgrade, and UnInstall

There are three types of script: an install script, an upgrade script and an uninstall script. To understand what these are and how to use them we need to understand the installer. The installer does (among many other things) 2 things of importance here: (1) it reads the version of your module from the manifest and (2) it checks to see if that module is already in the system and gets the existing version nr. So we have two version nrs: new and existing. If existing is null, then it is a new install. So what happens on a new install? Well, the script processor looks for a file with the pattern Install.xx.yy.zz.SqlDataProvider. This is the “Install Script”. If found it will run that and remember the version nr (i.e. the xx.yy.zz). After that it will run all (upgrade) scripts starting with a higher number than xx.yy.zz. Note: if there is no install script it will begin running all scripts from the start. The install script is not mandatory but it’s quite handy as we’ll discover. And also note there can be only one install script!

One thing you may need to do is to tie your data to DNN’s data. Let’s say you have data that is tied to the module instance, then at some point you’ll have a foreign key on the Modules table to ModuleID. With a CASCADE DELETE to make sure that your data gets removed if the user deletes the module. This is common practice. But what if DNN decides to change the modules table? Well, then most of us will need to scramble and change our code. Luckily they will probably never touch the really basic data bits like ModuleID, PortalID and UserID. But you may have tied to something more obscure. So what do you need to do if that has changed? Well, first you’ll need to generate an upgrade script of course. This will make sure any existing users will get upgraded to the new situation. But there is a hidden snag here. Your older scripts would still tie in to the deprecated DNN model. And any user installing the module for the first time in a new DNN installation will get a big fat error upon install (“could not find column XYZ …”). So what you do at this point is to create an Install script that is “fresh” so all new installs have no issue whatsoever. Finally: depending on your install base you may need to edit older upgrade scripts to make sure they don’t generate errors (this violates one of my rules to never touch upgrade scripts, by the way). But most often the new Install script will be enough. This is one advantage of the install script.

Now, what happens when the existing version is not null? Well, then it’s an upgrade and all scripts beginning with a number higher than the install version will be run. These scripts have the pattern xx.yy.zz.SqlDataProvider. They are incremental scripts in that they can assume that all scripts before them ran. These are the “Upgrade scripts”. Note that there is one special case: release 1.0.0. The very first script (whether it is distributed as an upgrade or an install script) is in essence and install script.

The final script is the “Uninstall Script” called UnInstall.SqlDataProvider. There is just one of these and the name is fixed. It is run upon uninstall and should rip out everything you’ve added/changed from the database. No ifs or buts. Everything. Why? Well, because the user may install again. And if you didn’t remove everything the installer may well throw an error upon second install. Which brings me to another point.

Errors and install hell

One unfortunate aspect of our world is that any error in script processing upon install leads to the installer failing the entire process! This means the user sees an error on screen and doesn’t get your module installed. But … some scripts may have run. If it’s a new install the damage is usually limited as you can rip out all the bits and pieces that have been installed and start all over. But if it’s an upgrade this can be catastrophic. It is a flaw in the DotNetNuke framework and more specifically the installer and to this date we haven’t found a good solution to this issue (in my installation instructions I tell users to backup before installing an upgrade and to roll back if anything went wrong). The only take away from this is: make sure your upgrade scripts are generously littered with protective code constructs. E.g. “IF EXISTS(my new stored procedure) THEN (rip it out first)” before you create a procedure. Or “IF NOT EXISTS(some column on my table) THEN ALTER(that table to add that column)”. It’s not that much work and avoids one source of errors.

ObjectQualifier

Surely one of the first headscratchers when you get into module programming is the objectQualifier. If you’re (relatively) new to DNN you’ve probably wondered “WTF is that?” A little background then. This dates back to the early days of DNN when hosting was expensive (well, it’s still not cheap if you want good hosting) and databases added significant cost (that has changed somewhat). So the idea was this: you could cram multiple DNN installations into a single database. Or: you could host more than one DNN installation with a single database. The way that would work is by prefixing everything with a blurb that is unique to the installation: the objectQualifier. Since that time quite a bit has changed. I’m not aware of anyone actually trying to host multiple DNN installations with a single database. But the objectQualifier has been used in a different way: as an obfuscation mechanism. If you’d have the ability to launch an SQL injection attack on a site, wouldn’t it be a comforting thought that “DELETE FROM Users” will no longer work? I for one have used objectQualifier in this way on several occasions. Then as module developers we now have to realize one more thing: once you’ve set the objectQualifier there is no going back. And believe me, behind the scenes many have given this careful thought as there would be a big benefit to rip it out as well (among others the ability to use Entity Framework). But believe me: as things stand it is impossible and it’s not going to happen. We are stuck with objectQualifier forever. Repeat after me … thou shalt include the objectQualifier in thy scripts.

Anyway: the practical consequence is that you will need to factor it in your code. So the first thing to do is to make sure you’re running your dev environment with an objectQualifier. This has the following two benefits:

- During testing you’ll immediately spot any errors.

- In the generation of scripts you can do a simple search and replace to get the objectQualifiers stuck in the right place (this is why you should use an objectQualifier that is “weird” and won’t occur naturally elsewhere).

DatabaseOwner

Again this is mandatory. You’ll be familiar with the term and will probably have noticed that “dbo.” works just as well. Well, yes. In most cases. Just not in all. Some customers have a different database owner set up for their DNN and then all your SQL goes to junk if you’ve been using dbo. So use “{databaseOwner}”. When to add it? It goes just about everywhere where you see {objectQualifier}. If in doubt: add it. The only place it doesn’t go is before Indexes and Keys as far as I can tell.

Azure

This has now become part of our lives. To keep things simple the code for successful Azure SQL installation is a subset of what we’re used to with SQL 2008. So some bits and pieces will need to be removed or the script will throw errors. Which bits? Luckily we have good resources on dotnetnuke.com. Note this is where an Install script comes in handy if you already have a distributed module out there. The Azure installs are all new so you can suffice with a new release with a new install script which is Azure compliant. The older scripts which may break the install will never run.

Module Qualifier

In my previous post I mentioned how important it was to isolate your code into a subdirectory below DesktopModules/CompanyName. Here I will do the same regarding the SQL. Please use a module qualifier. It works just like the objectQualifier. It sits in front of all your tables, keys, views, functions, procedures, etc. It makes sure that your awesome “Gallery” module with its “Images” table doesn’t conflict with your competitors far inferior Gallery module with a table with the same name. Prefixing everything with a unique code reduces the risk of SQL clashes.

Naming conventions

It is generally a good idea to have a naming convention to stick to. So decide if you’re going to use Object plural for table names for instance. Or singular. But stick to it. I go with object plural. So the “Widgets” table contains a bunch of “Widget” objects. And then I have GetWidget, AddWidget, UpdateWidget and DeleteWidget as procedures to do my CRUD operations. This makes your code easy to read for yourself and others if need be. Note that there is also convention around the naming of keys (primary/foreign). These are often overlooked.

My script generation method

So now to how I create scripts in a way that avoids errors as much as possible. In a nutshell:

- The first script is generated by a tool and then tweaked by hand

- Upgrade scripts are crafted by hand with a logical internal order: first changes to tables and keys, then to views and functions, finally to stored procedures.

- Install and UnInstall scripts generated by a tool

The first scripts usually come out of some code generation (O/R mapping) tool. There are many out there. I use CodeSmith. These scripts provide the basis for the first install script. I’d create my objects in SQL for instance, and then generate the first SPROCs for the CRUD and FK operations. The CRUD operations are the Get/Add/Update/Delete methods. The FK operations are the ones that are easily parsed out by the foreign keys. So GetWidgetsByModule for instance. Typically when I’m happy with my object model I’ll generate this, add it to SQL and begin the hand work. It’s really like the potter who throws the first slab of clay on the wheel and creates the first shape. The details come in later.

The crafting of SQL of the upgrade scripts I consider something I don’t want tools to handle as I want to keep them under very close supervision. I will begin any script with any changes to tables like adding/removing columns. Then I’ll change/add any foreign keys. This concludes what I call the structure. Then I’ll move to the “code” bit which are things like functions and procedures. Here’s an example of this way of structuring the script. In 99% of cases it’s just tables, keys and procedures by the way. Procedures and functions are most like “code” and I craft these in SQL Management Studio as I’ll have the debugging capabilities of that tool. Then I’ll copy out the code to my script file and make sure all dbos and object qualifiers are replaced and I’ll test run the install under Host > SQL. For me this has proven the most robust method in the generation of stored procedures.

Under some circumstances I will deviate from the aforementioned pattern if it improves legibility of the script. This can be the case if I make a change that can be summarized under a simple banner (like “Add Commenting”) and the associated changes are isolated in my data model and the script part is concise. So what I’ve outlined is not cast in stone. The most important thing is that your upgrade scripts remain legible and logical in structure so that if you go back to them you can quickly navigate them to see if all is in order.

Upgrade scripts are also cast in concrete for me. If you keep changing these it becomes a nightmare when different users start upgrading with different versions of your module as you’ll be left wondering which scripts ran exactly. leaving them untouched removes this uncertainty. You’ll know that a user moving from version X to version Y will have run scripts A, B and C. Regardless of the releases he/she installed in between. So leave your upgrade scripts untouched in future versions. There are exceptions to this rule (see above) but for me it’s only permitted under really exceptional circumstances. If I do a diff between two module versions there should be no differences between the upgrade scripts! That would be a red flag for me.

A final word about the upgrade scripts: of course there is also a logical order from the point of view of SQL Server. This is why I’ve chosen the pattern of table changes first, then keys, then procedures. If you’d do it the other way around you’d get errors.

Automated script generation

You’re probably familiar with the concept of automated script generation. Basically you tell a tool to look at the difference between database A and B and generate the difference scripts. In our case the Install script is one way, the Uninstall script is the reverse way. What you’ll do is the following:

- Package your module with the current set of upgrade scripts

- Create two DNN installations with the same objectQualifier which can easily be parsed out

- Install the module to one of these two installations

- Run the script generation tool to generate the install and the uninstall scripts

- Search and replace dbo and the objectQualifier in the scripts

At some point in your DNN career you’ll feel the urge to automate the whole bloody thing. Personally I’ve used the Red Gate tools before but because of the way they generated the scripts (more geared to do full DB diffs and making it difficult to isolate to a module) and because I had issues with their licensing model, I decided to look at how hard it would be to roll my own. It turns out that with MSO (components that come with SQL Management Studio) you can get quite far and I’ve now created my own processor (in .NET) that generates the scripts exactly how I want them to be. Here is an example of using these components.

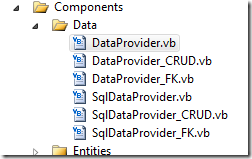

The data provider

Now that we have a rock solid database, we turn our attention to the data provider. So the DataProvider class and the SqlDataProvider class. OK, let’s keep it concise: if you’re doing all this by hand you’re doing it wrong. I mean: there are tons of code generation tools out there that will help you create these classes. I know there’s Entity Framework and it makes it all look so easy, but our way of doing things doesn’t need to be that hard. I use CodeSmith. But there are other code generators out there. Each with their own merits. What I’d typically do is at the start of the project I’ll run the generation once and incorporate that code in my project. Then, during the project, I’ll generate the data layer to some directory outside the main module directory and do a diff with what I have.

Another tip: make use of partial classes when using automated code generation. So my generated code goes to a couple files like “DataProvider_CRUD" for instance. Then, in my project I’d also have the “DataProvider” file which is the same class and hols hand crafted stuff. Now, when running a diff, I can easily import a new column into my project as I’m just comparing with the first file. It really makes life simpler that way.

SqlDataProvider specifics

The SqlDataProvider class holds the references to the database like the db owner and object qualifier and so on. I like to use this pattern when implementing the various SPROCs:

Public Overrides Function GetTextsByObject(ByVal ModuleId As Integer, ByVal ObjectId As Integer, ByVal Locale As String, ByVal Version As String) As IDataReader

Return CType(SqlHelper.ExecuteReader(ConnectionString, DatabaseOwner & ObjectQualifier & ModuleQualifier & "GetTextsByObject", ModuleId, ObjectId, Locale, Version), IDataReader)

End Function

You see the use of ModuleQualifier? That is a constant at the top of the class:

Private Const ModuleQualifier As String = "LocalizationEditor_"

Of course you are using a qualifier for your module, right? If not: see above. Note also that with this pattern your method name (in this case “GetTextsByObject”) is replicated throughout your data layer without any contamination of your module qualifier.

Also: you’re not creating the SqlDataProvider as a separate project, right? I mean MyAwesomeModule.SqlDataProvider.dll is soooo 2005. There is no need. I know very old modules do it, but you can just as well roll it into your main dll. The advantages are(1) you’ll avoid having another project in your solution, (2) you avoid tracking another file in your distribution and (3) your user’s DNN becomes a little bit more efficient. Regarding nr 2: ever had a customer come with some obscure error due to the fact that he had the main module dll with an older SqlDataProvider dll? These things happen. Keep the number of dlls to a minimum! And regarding 3: it came to light somewhere during DNN 4’s run that the amount of dlls had an impact on performance. Since then the rule has been: keep the number of dlls to a minimum. Which is, by the way, why I find it puzzling that I’ve seen DNN Corp recently adding more dlls unnecessarily to the project like DotNetNuke.Web.Client.dll, DotNetNuke.Web.dll, etc.

Final notes

Be sure to check out this year’s DNN World for a chance to meet me in person and ask me about this stuff.